Generative AI for students

Generative Artificial Intelligence (AI), explained below, has the potential to change the way we learn, but it also has pitfalls. Unless you are explicitly asked to use it in your module, you are not required to use Generative AI for your studies.

This document provides general guidance on how to use Generative AI responsibly and effectively. The key points are:

- You may use Generative AI to support your learning.

- Check your module’s Assessment tab to find out if you may use Generative AI as part of assessment and, if so, how to acknowledge its use.

- If you have shared with the university that you have a disability, you may use Generative AI as a reasonable adjustment, provided its use aligns with the assessment’s intended learning outcomes and does not compromise academic integrity.

- You must not provide confidential or personal information about any individual or organisation, including yourself and the OU, to any Generative AI tool.

- You must not put OU materials into any AI tool other than Microsoft Copilot in protected mode or a tool provided to you as a reasonable adjustment for your disability.

- Be aware that the outputs of Generative AI tools may be incorrect, biased, incomplete or irrelevant.

- Dependence on or inappropriate use of Generative AI will prevent you from developing the depth of understanding and skills you need for further study and for employment.

These points are elaborated in the rest of this document.

Your module and qualification websites may provide more specific guidance.

If you have not come across Generative AI before, you may wish to start with the Generative AI Pathway in the OU Library’s ‘Being Digital’ collection.

Generative AI tools generate text, images, videos or audio in response to user prompts. They do so in a similar, but far more sophisticated, way to predictive text in messaging apps, based on patterns detected in the vast collections of materials that these tools were trained on. Generative AI tools typically do not rely on databases of facts, and they do not have lived experience, logical reasoning, a sense of moral right or wrong, or understanding of the meaning of words, images and sounds.
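To make the comparison with predictive text more concrete, here is a toy sketch in Python. It is not how any real Generative AI tool is built: real tools use very large neural networks trained on vastly more material. The function names (`train_bigrams`, `most_likely_next`, `sample_next`) and the tiny training text are invented purely for illustration. The sketch only counts which word tends to follow which, and then suggests a next word from those counts, without any notion of facts or meaning.

```python
import random
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def sample_next(follows, word, rng):
    """Pick a follower at random, weighted by how often it was seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    candidates = list(counts.keys())
    weights = list(counts.values())
    return rng.choices(candidates, weights=weights, k=1)[0]


# Hypothetical, deliberately tiny "training material".
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)

print(most_likely_next(model, "the"))               # the most common follower of "the"
print(sample_next(model, "the", random.Random()))   # may differ from run to run
```

Because `sample_next` chooses among the possible followers at random, running it twice with the same input can give different words. The suggestion depends only on patterns in the training text, so a pattern that is common but wrong would be reproduced just as readily as one that is correct.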

The way Generative AI works has raised various concerns, including:

- Environmental impact: Training and using AI involves many computations, which require lots of electricity and water (to cool the servers).

- Copyright: There are legal cases regarding the training of systems on materials without the consent of their authors.

- Labour: Training often involves low-paid workers filtering graphic and disturbing content, which can lead to mental health issues.

- Lack of replicability: You may get, for the same prompt and tool, different answers at different times.

- Errors: Answers sound authoritative and plausible but may be inaccurate, irrelevant or inconsistent.

- Bias: Answers may reflect (and reinforce) biases that exist in the training materials.

Generative AI should be used judiciously. The last section of this document asks you to reflect on your use of Generative AI.

1.1 How can I use Generative AI tools?

Most AI tools are accessible via your web browser. While many include paid-for features, the freely available features are enough to support your OU study, should you decide to use them. Typically, these features include the following:

- You can type a prompt or dictate it into your computer’s microphone.

- You can upload files to the tool, e.g. by attaching them to a prompt.

- You can have the tool’s response read aloud to you.

- You can copy the tool’s response to the clipboard.

- You can ask the tool to generate a different response.

- You can have a conversation with the tool to refine the response to your original prompt.

- You can have multiple conversations going on in parallel. The tool keeps a record of all of them, so that you can continue them later. You must explicitly delete a conversation you don’t want to keep.

- You can have conversations in languages other than English.

Several browser-based tools can also generate and explain computer code in Python, Java and other programming languages, but specialised tools that must be installed in a programming environment may be better for that purpose. In addition, many other tools, like Microsoft Word, Grammarly and the Adobe Creative Cloud applications, are incorporating Generative AI features. The variety and capability of AI tools are evolving rapidly.

1.2 What Generative AI tools can I use?

You can use any Generative AI tool you wish, subject to the instructions in Section ‘Using Generative AI safely’ and on your module’s website.

The most popular in-browser tools include OpenAI’s ChatGPT, Google’s Gemini, Anthropic’s Claude, and Microsoft’s Copilot. The most popular tool for programming environments is GitHub Copilot. All tools require you to create an account or use an existing one, so that they can save your conversations. Gemini and Microsoft Copilot have the advantage of using their parent companies’ search engines to retrieve web pages related to the AI answer, to help you evaluate its accuracy.

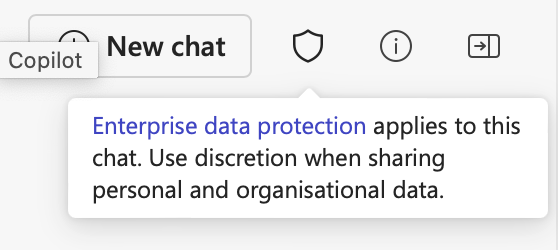

The only safe tool (from a copyright perspective) for OU study is Microsoft Copilot in protected mode, as it ensures that data stays within the OU and is not used for training, and that Microsoft won’t claim copyright on the generated outputs. You can access Copilot in various ways, e.g. at https://m365.cloud.microsoft/chat or in the Edge browser. Make sure you log in with your ou.ac.uk account and check that a shield icon is displayed. Hovering over it should show that enterprise data protection applies.

Nevertheless, you must not put personal and organisational data into Copilot (see the next section).

If no tool is suggested for the module or subject you are studying, you may wish to try at least two different tools with the kind of prompts given below, to understand their strengths and weaknesses. Remember that only Microsoft Copilot provides added protection when logged in as an OU student.

The legal framework around Generative AI is complicated and evolving. You should assume that any material (text, image, video, audio) you find on the web is under copyright unless all its creators died over 100 years ago or the material includes an explicit statement that it is in the public domain. Moreover, you should assume that AI providers will store and use any information you provide, even on a paid plan and even if you later delete your conversation, unless their terms and conditions say otherwise.

To use Generative AI in an ethical, legal and responsible way, make sure you:

a) Do not provide AI tools with any personal or confidential information about yourself, the OU or any other individual or organisation. Personal information is any information relating to a living person, from which the person can be identified, either directly or indirectly (for example, through the use of identification numbers).

b) Do not provide AI tools with any material copyrighted by the OU or any other individual or organisation, unless:

- the third-party individual or organisation has given permission to do so

- you are instructed in your module to do so

- you are using Microsoft Copilot in protected mode

- you are using a tool provided to you as a reasonable adjustment for your disability

c) Do fully acknowledge any use of Generative AI in your answers to formative or summative assessment, if your module allows the use of AI in assessment. The section below on assessment has more details.

In points a) and b), providing AI tools with data includes the prompts you write, the files you upload, and any other access you grant to the tool. For example, in-browser chatbots may have access to the web pages you currently have open. Make sure you don’t open pages with sensitive information while the tool is operating. Check whether the tool you use has settings to control the data it has access to.

Regarding point a), as a general guide, do not enter names of living people into an AI tool unless they are public figures or have published work in their name.

Point b) means, for example, that other than for the listed exceptions, you cannot use AI tools to summarise parts of OU material or third-party articles, to explain figures and charts, or to clarify assessment questions, as all these cases would require copying or uploading copyrighted material to the AI tool. You can, however, upload your personal study notes to an AI tool and ask it to summarise, restructure and rephrase them, remembering that any use of AI-processed notes in assessment (if allowed by your module) must be acknowledged as per point c).

Note that points a) and c) also apply to Microsoft Copilot in protected mode.

Misuse of Generative AI falls under section 3.6 of the Academic Conduct Policy (if you are an undergraduate or postgraduate taught student) and sections 3 and 4 of the PGR Plagiarism and Research Misconduct Policy (if you are a postgraduate research student).

Subject to the above restrictions, you can use AI tools as much and as often as you wish to consolidate your learning, e.g. to obtain alternative explanations, to test yourself, to critique your work, or to practise.

Here are some example prompts you may consider using:

- Explain … with examples.

- Ask me a multiple-choice question about … with 5 options.

- Ask me a fill-in-the-blanks question about … with 3 blanks.

- Critique the following with respect to correctness, completeness and clarity: …

- Let’s chat in advanced/beginner level French/German/Chinese. I’m a tourist going to the city centre, you’re a bus driver. Wait for me to start the conversation. Don’t correct me during the conversation. (This is followed by a conversation in the given language and at any time you can switch back to English and ask for a list of mistakes you made and their correction.)

In the last example, the AI tool will remember throughout the conversation the context you provided (the language, the tourist and driver roles, etc.) because AI tools build on the conversation so far to reply to the current prompt. This means that to avoid confusing the tool or having to repeat the context, it is best to keep each conversation to a single topic.

Being specific with your prompts can help the Generative AI tool provide a more relevant response. For example, here are some prompts with added specifications:

- Explain … to a second-year Computing undergraduate who has not taken calculus.

- Rephrase the previous answer without using the concept of …

- Summarise the concept of … from the perspective of a first-year psychology student.

You may also use Generative AI to support a literature search and review. However, as explained in Section ‘Understanding Generative AI’, these tools rely on linguistic patterns rather than factual knowledge, so bibliographic references and summaries may be fabricated. You may prefer to use specialised tools, like Consensus and Undermind, but keep in mind that no tool can replace your own reading of source materials.

Since answers may be wrong or biased, you must critically review the output of Generative AI tools. The following may help you do so:

- Read the OU materials before you use an AI tool and compare the facts provided.

- Use Gemini or Microsoft Copilot to retrieve related web pages and check the answer against them.

- Evaluate the tool’s output (and any retrieved pages) using the PROMPT method (Presentation, Relevance, Objectivity, Method, Provenance, and Timeliness).

- Discuss the tool’s output in a module forum.

- Attend (or watch the recording of) the OU Library training session on Exploring Generative AI: critical skills and ethical use.

Keep in mind that being able to critically review Generative AI output requires you to already have enough knowledge of a topic to decide if the material generated is valid and accurate.

More generally, make sure you use Generative AI to complement your study of OU materials and your interactions with peers and tutors, not to replace them.

While the use of Generative AI for learning is unrestricted, its use for each formative or summative assessment piece (e.g. a single question, or an MSc or PhD dissertation) falls into one of the following three categories. The category will be stated in the piece itself, in the Assessment tab of your module site or in the research degree regulations. If no category is stated, you may assume it is Category 2 (you may use AI), but it’s best to check with your tutor or supervisor.

Category 1: You cannot use Generative AI to complete an assessment piece.

For assessments in this category, you must not make any use of Generative AI. This category typically applies when the use of AI tools would prevent you from acquiring, practising and demonstrating basic knowledge and skills (including the application of concepts, developing arguments and following procedures) needed for your study and employment. Using Generative AI for this category is subject to academic conduct procedures.

Category 2: You may use Generative AI to assist you in completing an assessment piece.

For assessments in this category, you are allowed (but not required) to use Generative AI. The assessment piece or the module website may state which uses are allowed and which aren’t. In this category, making use of Generative AI in ways that are not allowed or not declaring its use is subject to academic conduct procedures.

Although this category allows using Generative AI, you should avoid relying too much on it, as this will prevent you from developing the depth of understanding and the higher-level skills (concise and clear oral and written communication, critical thinking, problem-solving, creativity, etc.) needed for studying subsequent modules, for employment and for making a new contribution to your field (if you are a PhD student).

Category 3: You must use Generative AI to complete an assessment piece.

For assessments in this category, you are required to use Generative AI, typically for addressing larger or more complex problems or for demonstrating your skills in using AI tools and critically examining their limitations in your area of study. You must still acknowledge how you used Generative AI, to demonstrate academic integrity.

Regardless of the category the assessment falls under, remember to follow the guidance in Section ‘Using Generative AI safely’. Your assignments must be your own work, spoken or written in your own words so that they represent your own voice, and properly referenced to indicate the sources you have used. Generative AI is a source like any other. You should also look at the Good Academic Practice Collection on OpenLearn.

Whatever the category of the assessment, if you have shared with the university that you have a disability, you may use Generative AI as a reasonable adjustment. (For examples, see items 2.5 and 2.6 of the Academic Conduct Policy.) However, such use must not compromise academic integrity or the assessment’s learning outcomes. For example, if a module asks you to record an audio file to assess your pronunciation, you must not use a text-to-speech system, as that would defeat the task’s purpose. Similarly, if an assessment asks you to create your own digital designs, using an AI image generator would not allow you to demonstrate your skills. In such cases, if your disability would impact completing the task, the module team is likely to offer an alternative assessment. Please consult your module website or contact your tutor when in doubt about the use of Generative AI as a reasonable adjustment for assessment.

4.1 Acknowledging Generative AI

If the assessment falls into Category 2 or 3, you must acknowledge the use of Generative AI. Unless the assessment tells you otherwise, you don’t need to report the use of Generative AI to correct spelling and grammar mistakes or as a reasonable adjustment, but you should report all other uses as follows.

- Reference all AI-generated outputs, using the Generative AI (Harvard) style. Use of third-party material (including AI outputs) in assessment without citing it constitutes plagiarism, which is an academic conduct offence.

- Summarise how you used Generative AI in an Appendix that describes your conversation with the tool and what you did with its outputs. Put your prompts in quotation marks and cite the conversation as personal communication. For example,

For Question 4, I asked for “3 arguments in favour of and 3 against [topic] in 150 words” (Gemini, 2024). I expanded two arguments and submitted a draft, asking the tool to “critique it for clarity and style”. It suggested adding examples for clarity, which I did.

In addition, keep a record of the conversation, so that you can provide it to your tutor if asked for it. Most tools automatically save the conversation until you delete it.

In summary, do not present AI-generated work as if it were your own; instead, show how you used Generative AI to work with you and not for you.

Generative AI can be a powerful aid to your studies, but it also reduces opportunities for learning if relied upon too much. You may want to routinely ask yourself the following questions, suggested by Jennie Blake, Head of Learning and Teaching at the University of Manchester Library:

- Why am I considering using a Generative AI tool? What do I hope it will do?

- Am I losing out on key learning by using it in this way? What should I do to make sure I learn what I need to?

- What are the risks of using the tool like this and what else should I think about?

- Having used the tool, what skills have I acquired which I did not have before?

- What is my plan for using these skills/the tool now/next?

For example, as part of what else to think about in the third point, you may reflect on how much time you spend with Generative AI (including rewriting prompts and acknowledging its use) and whether it leads to improved productivity and outcomes.

We will review this guidance annually, to ensure it reflects developments in Generative AI in education. If you have any questions not addressed by this guidance:

- If you are a taught student, check your module and qualification websites for any additional guidance, before contacting your tutor or the Student Support Team.

- If you are a research student, check the Position Statement and Guidance on Generative AI before contacting the Graduate School.

Guidance last updated 15 July 2025.